QCM and Distinguishing AI-generated Fakes from Reality

Article by Ontonix

“Generative AI is a type of artificial intelligence technology that can produce various types of content including text, imagery, audio and synthetic data. The recent buzz around generative AI has been driven by the simplicity of new user interfaces for creating high-quality text, graphics and videos in a matter of seconds.

The technology, it should be noted, is not brand-new. Generative AI was introduced in the 1960s in chatbots. But it was not until 2014, with the introduction of generative adversarial networks, or GANs — a type of machine learning algorithm — that generative AI could create convincingly authentic images, videos and audio of real people.

On the one hand, this newfound capability has opened up opportunities that include better movie dubbing and rich educational content. It also unlocked concerns about deep fakes — digitally forged images or videos — and harmful cybersecurity attacks on businesses, including nefarious requests that realistically mimic an employee’s boss.” (source)

This is all very nice, but technology with these capabilities is raising ethical, moral and legal concerns. Clearly, this is just the beginning. But is it possible to defend oneself against the proliferation of fakes? Fortunately, Nature comes to the rescue. Nature has unlimited resources at its disposal and has physics on its side. It can create phenomena of amazing intricacy, depth and complexity. Whenever you imitate, model or emulate something, you can get very close to the real thing, and the differences may even be invisible to conventional observers or techniques. But the differences will be there.

What we have observed over nearly two decades of measuring the complexity of all sorts of systems, including digital models of physical experiments, is that surrogates are almost always less complex than the real thing. This makes sense, even intuitively. Complexity is a measure of structured information, i.e. information that arises from the presence of structure. In a surrogate, it is extremely difficult to reproduce or generate all the subtleties of such structure, especially in highly complex systems. Thanks to this principle, we are able to identify Balance Sheets that have been manipulated. It is easy to manipulate the arithmetic in a Balance Sheet, but doing so while preserving its underlying structure is a task of an entirely different order of magnitude.
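The idea that a surrogate can reproduce the numbers while losing the structure can be illustrated with a toy example. The sketch below is not the QCM algorithm, whose details are not described in this article. It assumes a crude stand-in: "structure" is the set of variable pairs whose absolute Pearson correlation exceeds a threshold, and "complexity" is the sum of those correlations. The function names, the 0.3 threshold and the synthetic data are all hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def structure_map(columns, threshold=0.3):
    """Toy 'map': {(i, j): |r|} for every pair of columns with |r| >= threshold."""
    edges = {}
    for i in range(len(columns)):
        for j in range(i + 1, len(columns)):
            r = abs(pearson(columns[i], columns[j]))
            if r >= threshold:
                edges[(i, j)] = r
    return edges


def toy_complexity(columns, threshold=0.3):
    """Toy 'complexity': total strength of the retained relationships (assumption)."""
    return sum(structure_map(columns, threshold).values())


rng = random.Random(0)  # fixed seed so the example is repeatable
n = 500

# "Genuine" system: three variables that depend on one another.
a = [rng.gauss(0, 1) for _ in range(n)]
b = [x + 0.3 * rng.gauss(0, 1) for x in a]
c = [y + 0.3 * rng.gauss(0, 1) for y in b]
genuine = [a, b, c]

# Surrogate: every column keeps exactly the same values, but each column is
# shuffled independently, so the relationships between columns are destroyed.
surrogate = []
for column in genuine:
    shuffled = list(column)
    rng.shuffle(shuffled)
    surrogate.append(shuffled)

print("genuine  :", len(structure_map(genuine)), "links,", toy_complexity(genuine))
print("surrogate:", len(structure_map(surrogate)), "links,", toy_complexity(surrogate))
```

Each surrogate column contains the same values as its genuine counterpart, so any per-variable check passes, yet the relationships between variables are gone and the toy complexity drops. A real surrogate would be far subtler than an independent shuffle. The sketch only shows why a structure-based measure can see what per-variable checks cannot.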

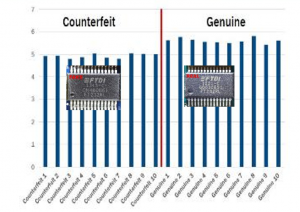

One example comes from a recent project in which the goal was to distinguish counterfeit chips from genuine ones. QCM was extremely successful, with a 99.95% success rate. Here too, we found that fake chips were slightly less complex than the real ones, as shown in the figure below:

Other examples follow. Here we compare two sets of 10 chips in a single QCM pass. Once again, the fakes stand out very clearly, even though the differences are truly microscopic.

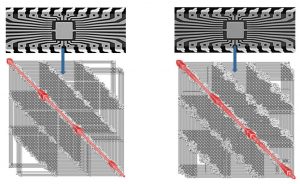

The reason for such a substantial degree of separation lies in the intricacies of the respective structures of the underlying Complexity Maps. The example below shows a fake (left) and a real chip (right) and the respective Complexity Maps generated from chip responses to a particular form of excitation. The maps are very similar, but they are not the same, and we can spot the difference.

But there is more than just the structure of complexity. Complexity has a very particular makeup. If a system has one hundred dimensions, each of these dimensions will have a particular footprint within that system. Here too, a fake will have a different complexity makeup from the real thing, providing yet another mechanism for distinguishing the two.
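A per-dimension footprint of this kind can be sketched as follows. This is only an illustration under stated assumptions, not the QCM definition: a map is represented as a hypothetical dictionary of weighted links between variables, each link's weight is split equally between its two endpoints, and a variable's footprint is its share of the total. The link weights are invented for the example.

```python
def footprint(edges, n_vars):
    """Share of the total link weight attributed to each variable.

    `edges` maps (i, j) index pairs to non-negative weights. Each weight is
    split equally between its two endpoints (an assumption of this sketch).
    """
    total = sum(edges.values())
    share = [0.0] * n_vars
    for (i, j), weight in edges.items():
        share[i] += weight / 2
        share[j] += weight / 2
    if total == 0:
        return share
    return [s / total for s in share]


def footprint_distance(fp_a, fp_b):
    """L1 distance between two footprints of equal length."""
    return sum(abs(x - y) for x, y in zip(fp_a, fp_b))


# Invented weights: the fake lacks one relationship present in the genuine system.
genuine_map = {(0, 1): 0.9, (1, 2): 0.8, (0, 2): 0.7}
fake_map = {(0, 1): 0.9, (1, 2): 0.8}

genuine_fp = footprint(genuine_map, 3)
fake_fp = footprint(fake_map, 3)

print("genuine footprint:", genuine_fp)
print("fake footprint   :", fake_fp)
print("distance         :", footprint_distance(genuine_fp, fake_fp))
```

In this toy case, a single missing link shifts the footprint of every variable, not only the two it connected. That is why the makeup can serve as a check in addition to the overall complexity value.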

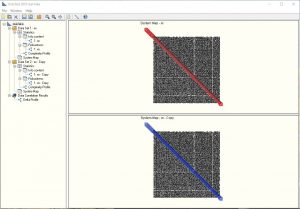

There is one more formidable QCM-based instrument when it comes to separating fakes from reality, and that is the dynamics of complexity. When a system evolves, so does its complexity. An example is shown below:

At the end of the day, for a fake to go undetected, it should satisfy the following criteria:

- CRITERION 1: The complexity of the fake should be very close to that of the real thing.

- CRITERION 2: The Complexity Map should be very close to that of the genuine system one is mimicking.

- CRITERION 3: The dynamics of complexity should also be very close to that of the system in question.
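The three criteria above can be expressed as a simple checklist. The sketch below is hypothetical and does not reflect how OntoTest or QCM actually compare systems: it assumes each system is summarized by a scalar complexity, a set of map links and a time series of complexity values, and it uses an arbitrary 5% tolerance with simple closeness measures (relative difference, Jaccard overlap of links, normalized RMS difference). All names and numbers are invented for illustration.

```python
import math


def relative_gap(a, b):
    """Relative difference between two non-zero scalars."""
    return abs(a - b) / max(abs(a), abs(b))


def map_overlap(links_a, links_b):
    """Jaccard overlap of two link sets (1.0 means identical topology)."""
    a, b = set(links_a), set(links_b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def dynamics_gap(series_a, series_b):
    """RMS difference of two equal-length series, scaled by the mean level of the first."""
    if len(series_a) != len(series_b):
        raise ValueError("series must have the same length")
    mean_sq = sum((x - y) ** 2 for x, y in zip(series_a, series_b)) / len(series_a)
    mean_level = sum(abs(x) for x in series_a) / len(series_a)
    return math.sqrt(mean_sq) / mean_level


def check_criteria(real, fake, tol=0.05):
    """Return which of the three criteria the fake satisfies within `tol`."""
    return {
        "criterion_1": relative_gap(real["complexity"], fake["complexity"]) <= tol,
        "criterion_2": map_overlap(real["links"], fake["links"]) >= 1 - tol,
        "criterion_3": dynamics_gap(real["series"], fake["series"]) <= tol,
    }


# Invented summaries: the fake matches the overall level but not the structure
# or the way complexity fluctuates over time.
real = {
    "complexity": 10.0,
    "links": {(0, 1), (1, 2), (0, 2), (2, 3)},
    "series": [10.0, 11.0, 9.0, 10.5],
}
fake = {
    "complexity": 9.8,
    "links": {(0, 1), (1, 2), (2, 3)},
    "series": [9.8, 9.8, 9.8, 9.8],
}

result = check_criteria(real, fake)
print(result)
```

In this invented case, the fake passes the first check and fails the other two: matching a single number is easier than matching the map or its evolution.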

While generative AI is proving to be very powerful, it will be difficult for it to satisfy all three criteria, precisely because it fails to accommodate complexity. The one that is probably the easiest to satisfy is CRITERION 1, but when it comes to intricate structure, it becomes immensely difficult to fake what is really going on. There is a special tool for detecting such differences. It is called OntoTest and it uses the QCM algorithm to compare two systems.

Fakes, whether produced with generative AI or other tools, are going to proliferate in the near future, and QCM technology has the potential to unmask high-quality fakes and provide scientific support in litigation cases.